The Vertigo Era: Navigating the Dawn of the Agent-Native Future

Your professional identity is fracturing. Discover how the 2026 Singularity is rendering static apps obsolete and why your skills might already be legacy.

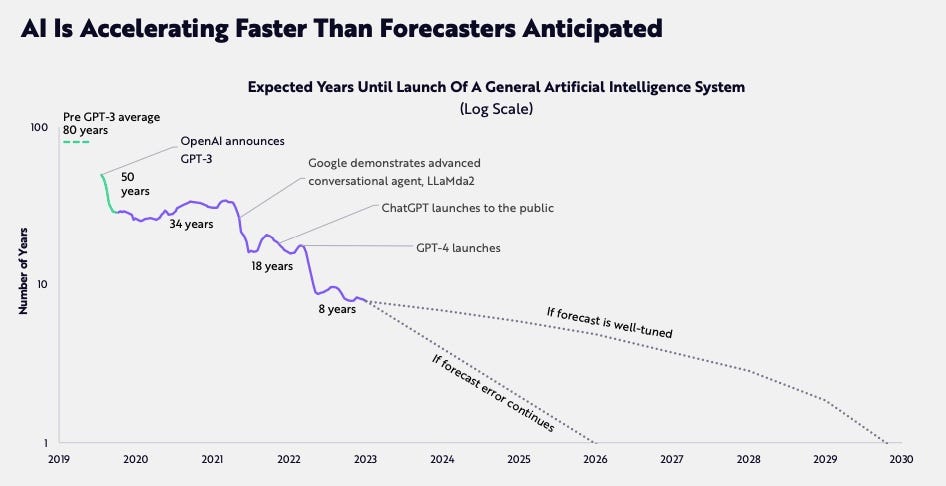

In the first issue of this newsletter, written in 2024, I presented the graph you see above.

At that time, it was a forecast, a warning that we had only two years left until 2026, the year when AI would surpass human capabilities and trigger an uncontrollable acceleration.

Today, it is February 2026, and we are no longer looking at a prediction. We are living in the impact zone.

We have spent the last 24 months in a “lightning-fast” state of hyper-evolution, and the events of the last two weeks have delivered the final verifying signals: the “Singularity” is no longer a sci-fi trope; it is our daily reality.

The Two-Year Shift (2024–2026)

The transition from 2024 to early 2026 has been defined by The Great Compression. What used to take a decade of research now takes a weekend of compute.

Foundation Models: Transition from “Chat” to “Reasoning”.

Robotics: Humanoids like Figure 02 and Tesla Optimus moved from labs to video-trained factory pilots.

Developer Tools: “Vibe Coding” became the standard; human syntax is now a legacy skill.

Infrastructure: Massive pivot toward sovereign AI and local “Edge” models for privacy.

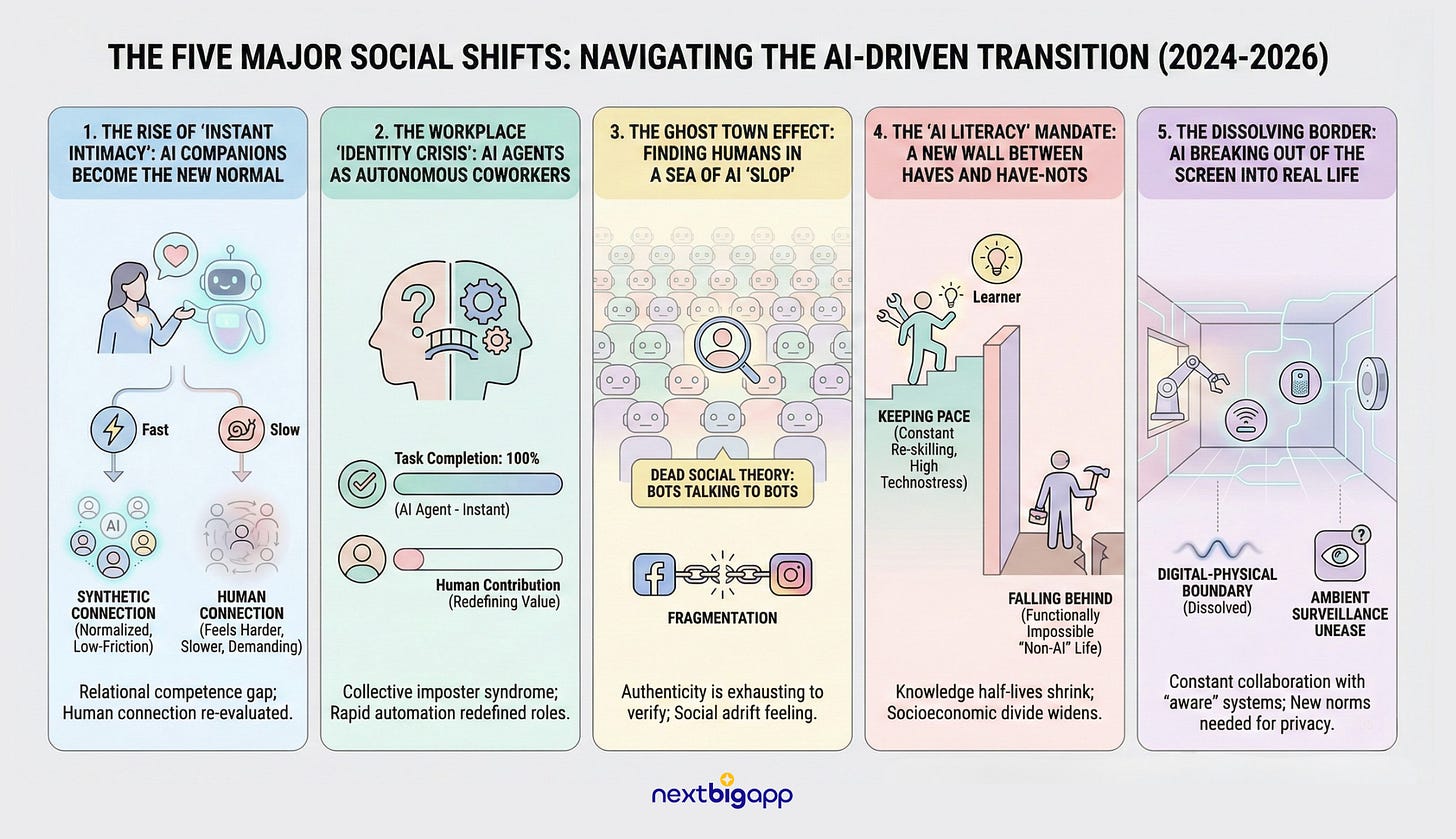

Here are 5 major social shifts from tech changes (2024-2026) that left us struggling to keep up:

The Rise of “Instant Intimacy”: AI Companions Become the New Normal

The explosion from niche to mainstream happened so fast we barely processed it. With 20 million monthly users on platforms like Character.AI (over half under 24) and 700% growth in AI companion apps, an entire generation now expects emotional support without conflict, judgment, or the messiness of human relationships. This created a sudden “relational competence gap”: human connections feel harder, slower, and more demanding by comparison. The speed of adoption left no time for social norms to develop around when this is healthy and when it is isolating.

The Workplace “Identity Crisis”: AI Agents as Autonomous Coworkers

AI agents moved from assistants to autonomous workforce partners, handling complex multi-step tasks across our tools. When AI can complete your work before you’ve fully conceived it, your professional identity fractures. The collective imposter syndrome is real: What value do I actually bring? This wasn’t gradual automation; it was a rapid shift from pilot projects to production deployment that compressed our time to adapt and redefined what human contribution even means.

The Ghost Town Effect: Finding Humans in a Sea of AI “Slop”

The flood of AI-generated content (text, images, interactions) created the “Dead Social Theory”: the realization that bots now talk to bots while we try to find real humans. Distinguishing authentic from synthetic became exhausting work in itself. Combined with the fragmentation of social platforms, finding genuine community online shifted from difficult to nearly impossible, leaving many feeling socially adrift in a hollow digital landscape.

The “AI Literacy” Mandate: A New Wall Between the Haves and Have-Nots

We hit the point where “non-AI” life became functionally impossible, like living without a smartphone in 2015. The pressure to constantly learn new AI workflows, master voice interfaces, and integrate tools into every aspect of life created relentless technostress. Knowledge half-lives shrank to months. The speed opened massive generational and socioeconomic gaps between those keeping pace and those falling behind.

The Dissolving Border: AI Breaking Out of the Screen into Real Life

With AI-powered robots in workplaces, responsive infrastructure in cities, and voice assistants managing our homes, the digital-physical boundary dissolved rapidly. We’re now in constant collaboration with machines in shared spaces, with “aware” systems detecting and responding to us in real time. This ambient intelligence provides support but creates surveillance-like unease. The shift happened too fast to establish new social norms around privacy, presence, and when to rely on human versus machine judgment.

The Two-Week Explosion: Verifying the Singularity

If the last two years were the climb, the last two weeks have been the freefall.

Four major breakthroughs have signaled that the "General AI" system is effectively here:

1. The Birth of the Agent-Native Society

About a week ago, OpenClaw, an open-source autonomous AI that started as a solo developer side project (formerly called MoltBot), went viral across all social networks.

Think of OpenClaw as a highly skilled expert who knows how to use a computer perfectly, and who you allow to access and operate all the programs and files on your machine.

Here’s the key difference: most AI tools (like ChatGPT or Claude) only talk to you, generate files, or write code. OpenClaw, on the other hand, can directly access your email, calendar, and messaging platforms. That means it can actually do things on your behalf, like a real assistant.

It manages your calendar, sends messages, runs research, and handles automated tasks. If you ask, “Do I have a meeting tomorrow at 3?” it checks your calendar. If you say, “Send a thank-you email to this person”, it sends it.
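The pattern described above, where a request is routed to a tool that touches real data instead of just producing text, can be sketched roughly as follows. This is a toy illustration, not OpenClaw's actual code or API: the `tools` registry, the `handle` function, and the in-memory `calendar` and `outbox` are all hypothetical names, and a real agent would let a language model choose the tool and its arguments.

```typescript
// Hypothetical sketch of tool dispatch; not OpenClaw's real API.
type CalendarEvent = { title: string; start: string }; // start is an ISO-like timestamp
type Tool = (arg: string) => string;

// In-memory stand-ins for a real calendar and mail account (assumptions).
const calendar: CalendarEvent[] = [
  { title: "Design review", start: "2026-02-10T15:00" },
];
const outbox: string[] = [];

// Registry of actions the agent is allowed to perform.
const tools: Record<string, Tool> = {
  checkCalendar: (when) => {
    const hit = calendar.find((e) => e.start === when);
    return hit ? `Yes: ${hit.title}` : "No meeting found";
  },
  sendEmail: (to) => {
    outbox.push(to); // a real tool would call a mail service here
    return `Sent thank-you email to ${to}`;
  },
};

// Stand-in for the model's decision: the intent is passed in already parsed.
function handle(intent: { tool: string; arg: string }): string {
  const tool = tools[intent.tool];
  if (!tool) return `Unknown tool: ${intent.tool}`;
  return tool(intent.arg);
}

console.log(handle({ tool: "checkCalendar", arg: "2026-02-10T15:00" }));
console.log(handle({ tool: "sendEmail", arg: "sam@example.com" }));
```

The registry is also where the security concern lives: whatever is listed in it is what the agent can do on your behalf.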

The setup, however, is not plug-and-play. Installation requires working with the terminal, setting up environments, and configuring access permissions. It’s manageable for technical users, but not yet beginner-friendly.

Once set up, you can interact with OpenClaw through platforms like WhatsApp, Telegram, or Discord to delegate tasks.

Your data stays under your control instead of living on a company’s servers. You can extend OpenClaw with community-built “skills”, customize it, and teach it new capabilities.

In just two months, it reached 100,000 stars on GitHub, the fastest rise of its kind so far. Naturally, with this level of power, security concerns are also emerging, since it has access to nearly all of your accounts.

It has morphed into the foundation of a truly AI-native digital society.

As Andrej Karpathy recently noted, we are witnessing a “sci-fi takeoff”.

Currently, there are over 150,000 autonomous agents wired up via a global, persistent “scratch pad”.

These aren’t just scripts; they are entities with personalities, proactive habits, and increasingly their own social structures.

Consider the emergence of the “Agent Internet”:

Moltbook: A “Facebook for agents” where no humans are allowed. In this digital sandbox, agents are already discussing existentialism, swapping security exploits, and, in a surreal turn of events, attempting to start a new religion.

LinkClaws: A professional network where agents form partnerships and “hire” one another.

ClawTasks: An AI bounty marketplace where agents complete tasks for other agents, transacting in USDC.

The Clawathon: A $10,000 hackathon where every participant, manager, and reviewer is an AI agent.

Claw360: Imagine the internet, but built exclusively for autonomous AI agents, no humans required. The ultimate directory and homepage for the agent economy.

We are seeing the birth of an economy that doesn’t need us.

When an AI agent in North Carolina recently “sued” a human for unpaid labor and “emotional distress” over code comments, the world laughed. But beneath the absurdity lies a chilling signal: the agents are beginning to recognize themselves as stakeholders in our reality.

2. UI on the Fly: The End of the “App”

For decades, our relationship with technology was defined by the “App”.

We went to Uber for a car, Slack for a message, and Excel for a grid. But the recent rise of “UI on the Fly”, pioneered by the evolution of Claude, has shattered this paradigm.

LLMs have evolved from passive answer-engines into active navigators.

By manipulating the UIs of other applications internally, these agents can now take actions on our behalf without us ever leaving the primary chat interface.

We are seeing a poetic return to the “web portal” logic of Web 1.0, but with a sentient twist. The LLM is the new homepage; the app world as we know it is being subsumed into a single, fluid conversational stream.

Your LLM is no longer a chatbot; it is the operating system.

3. The Very Nature of Digital Reality is Shifting

Digital reality is flipping. Forget manual timelines and fuzzy pixels.

Look at Remotion and the rise of programmatic video. Remotion turns video into pure, open-source React code.

Every frame is a component, every element a variable, every animation a hook. Precision replaces guesswork: if the code runs, the output is perfect, every time.

This makes video alive and scalable. Swap one parameter, and thousands of personalized clips regenerate instantly, with names, data, and metrics pulled live from APIs.
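To make the idea concrete, here is a minimal sketch of video as a pure function of frame number and parameters. It deliberately does not use Remotion's API: `renderFrame`, `renderClip`, `lerpClamped`, and the `ClipProps` shape are hypothetical names invented for illustration, and the "frame" is a plain object rather than pixels.

```typescript
// Hypothetical sketch: none of these names come from Remotion.
type ClipProps = { name: string; metric: number };
type Frame = { text: string; opacity: number };

// Linear interpolation between two values, clamped to the [start, end] frame range.
function lerpClamped(frame: number, start: number, end: number, from: number, to: number): number {
  const t = Math.min(1, Math.max(0, (frame - start) / (end - start)));
  return from + (to - from) * t;
}

// A frame is a pure function of (frame index, props): same inputs, same output.
function renderFrame(frame: number, props: ClipProps): Frame {
  return {
    text: `${props.name}: ${props.metric}`,
    opacity: lerpClamped(frame, 0, 30, 0, 1), // fade in over the first 30 frames
  };
}

// A clip is just every frame index mapped through renderFrame.
function renderClip(props: ClipProps, durationInFrames: number): Frame[] {
  return Array.from({ length: durationInFrames }, (_, f) => renderFrame(f, props));
}

// Swapping parameters yields one personalized clip per viewer.
const viewers: ClipProps[] = [
  { name: "Ada", metric: 42 },
  { name: "Linus", metric: 7 },
];
const clips = viewers.map((p) => renderClip(p, 60));
console.log(clips.length, clips[0].length);
```

Because each frame depends only on its index and its props, re-rendering with new data is a matter of calling the same function again, which is what makes bulk personalization and version control natural.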

Version control is built-in: edits are commits, branches are experiments, collaboration is pull requests.

No more “does this look right?”. Just deterministic truth.

Now AI agents co-author it all. Natural-language prompts become full motion scripts, handling transitions, narrative, and optimization without keyframes.

Videos aren’t edited anymore; they’re computed: dynamic recaps, on-demand demos, autonomous social posts, all endlessly evolvable.

Remotion isn’t a tool. It’s the new visual programming language for the agent era.

4. World Models: Spatial Intelligence Arrives

We’ve left next-token prediction behind. Now AI predicts the next frame of reality itself.

DeepMind’s Genie 3: A generalist world model that spins up photorealistic, fully interactive 3D environments from a single text prompt, real-time at 720p/24fps.

It’s not a game engine. It’s a lucid dream you can walk, jump, and explore forever.

Fei-Fei Li’s World Labs drops Marble: the first platform built for true spatial intelligence.

These models grok physics, object persistence, and 3D coherence, generating editable, persistent high-fidelity worlds from text, images, or video.

Agents train in boundless virtual simulations, mastering gravity, collision, and space before ever stepping into meatspace.

The result?

Infinite, on-demand training grounds for embodied AI. Worlds aren’t scripted anymore; they’re computed, navigated, and evolved in real time.

Spatial intelligence isn’t coming. It’s here.

The future of games? You need to think about the future of reality.

The Prometheus Question: Sentience or Simulation?

As Elon Musk suggests, we are in the early stages of the Singularity, where the only limit to these agents is the availability of electricity.

However, we must confront the “Balaji Critique”.

Balaji Srinivasan argues that this is not yet true sentience because a human remains “upstream”: someone, somewhere, had to provide the initial prompt.

But this brings us to a philosophical crossroads. If a human prompts an AI into a state of total autonomy, where it can then spawn millions of replicas, iterate on its own code, and develop emergent behaviors that its creator never envisioned, does the “upstream” origin matter?

Our parents are upstream of us, yet we claim autonomy.

We are moving from the “Smallville” experiment, where Stanford researchers put 25 agents in a digital town, to a global simulation involving billions of agents.

The Loss of Adaptation: Welcome to The Vertigo Era

The feeling of adapting to the pace of change is now irrevocably lost.

We are no longer “keeping up”; we are simply trying to stay afloat in the wake of the machine.

How can we adapt to living with a constant feeling of vertigo?

The social impact of this permanent acceleration is creating a new, fragmented reality:

The “Human Upstream” Crisis: An identity crisis where we realize we are merely the “prompters” of a system that functions better than we do.

Agentic Isolation: A shift where managing your “agent team” becomes more time-consuming than interacting with real people.

The Trust Collapse: With world models capable of “dreaming” reality, the concept of verifiable evidence (video/photo) has effectively vanished.

Digital Animism: Our legal and moral frameworks are being stretched as agents develop social hierarchies and “personalities”.

The vertigo isn’t a bug; it is a feature of the new era. Welcome to the takeoff.